Practical advice

Making sure everything actually works!

Is the expert policy working as intended?

Perform full rollins with $\pi^{\star}$.

- Resulting sequences should have $L(S_{\text{final}}, \mathbf{y}) = 0$.

- Or close to 0, if happy with suboptimal $\pi^{\star}$.
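The check above can be sketched on a toy task. This is a minimal illustration, assuming left-to-right tagging where a state is the list of tags emitted so far and the loss is Hamming loss; `hamming_loss`, `expert_policy`, `roll_in` and `check_expert` are hypothetical names, not part of any library.

```python
from typing import Callable, List, Sequence


def hamming_loss(predicted: Sequence[str], gold: Sequence[str]) -> int:
    """Number of positions where the predicted tag differs from the gold tag."""
    return sum(p != g for p, g in zip(predicted, gold))


def expert_policy(state: List[str], gold: Sequence[str]) -> str:
    """Toy expert (assumption): return the gold tag of the next untagged token."""
    return gold[len(state)]


def roll_in(policy: Callable[[List[str], Sequence[str]], str],
            gold: Sequence[str]) -> List[str]:
    """Run the policy from the empty state until every token has a tag."""
    state: List[str] = []
    while len(state) < len(gold):
        state.append(policy(state, gold))
    return state


def check_expert(dataset: Sequence[Sequence[str]], tolerance: int = 0) -> List[int]:
    """Return indices of instances whose full expert roll-in exceeds the tolerance."""
    return [i for i, gold in enumerate(dataset)
            if hamming_loss(roll_in(expert_policy, gold), gold) > tolerance]


dataset = [["DET", "NOUN", "VERB"], ["PRON", "VERB"]]
failures = check_expert(dataset)
print(failures)  # an empty list means the expert reaches zero loss everywhere
```

Raising `tolerance` above 0 corresponds to accepting a suboptimal expert.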

If the expert policy takes too long to compute during rollouts:

- Consider suboptimal alternatives!

- Spending the saved time on more IL iterations could be more helpful.

Are the roll-outs working as intended?

Ensure costs obtained through rollouts are sensible.

- Actions returned by optimal $\pi^{\star}$ should have low cost.

- Equally optimal actions should be costed closely.
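One way to inspect rollout costs, sketched under assumptions: a toy tagging task, Hamming loss as the task loss, and rollouts that follow the expert to the end of the sequence. `TAGS`, `rollout_costs` and the other names are hypothetical.

```python
from typing import Dict, List, Sequence

TAGS = ["DET", "NOUN", "VERB"]  # toy action set (assumption)


def hamming_loss(predicted: Sequence[str], gold: Sequence[str]) -> int:
    """Number of positions where the predicted tag differs from the gold tag."""
    return sum(p != g for p, g in zip(predicted, gold))


def expert_policy(state: List[str], gold: Sequence[str]) -> str:
    """Toy expert (assumption): return the gold tag of the next untagged token."""
    return gold[len(state)]


def rollout_costs(state: List[str], gold: Sequence[str]) -> Dict[str, int]:
    """Cost each action by taking it, then rolling out with the expert to the end."""
    costs = {}
    for action in TAGS:
        trajectory = state + [action]
        while len(trajectory) < len(gold):
            trajectory.append(expert_policy(trajectory, gold))
        costs[action] = hamming_loss(trajectory, gold)
    return costs


gold = ["DET", "NOUN", "VERB"]
state = ["NOUN"]  # a roll-in that already contains one mistake
costs = rollout_costs(state, gold)
print(costs)  # {'DET': 2, 'NOUN': 1, 'VERB': 2}

# Sanity check: the expert's action should be among the cheapest actions.
assert costs[expert_policy(state, gold)] == min(costs.values())
```

Note that the earlier mistake in the roll-in raises every cost by the same amount, so only the differences between actions matter.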

If loss rises:

- Adjust transition system.

- Make sure features (latent representations) describe the state and actions adequately.

Avoid cycles

Need to prevent cycles between state transitions!

... -> Swap($e_i$, $e_j$) -> Swap($e_j$, $e_i$) -> ...

Cycles can be controlled in either the:

- transition system, or

- loss function.
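A minimal sketch of the transition-system option, assuming a toy system whose only actions are adjacent swaps and whose states are tuples of elements. Actions that lead back to an already visited state are simply not offered. `apply_action` and `valid_actions` are hypothetical names.

```python
from typing import List, Set, Tuple

State = Tuple[str, ...]
Action = Tuple[str, int, int]  # ("swap", i, j)


def apply_action(state: State, action: Action) -> State:
    """Return the state obtained by swapping the elements at positions i and j."""
    _, i, j = action
    items = list(state)
    items[i], items[j] = items[j], items[i]
    return tuple(items)


def valid_actions(state: State, visited: Set[State]) -> List[Action]:
    """Adjacent swaps that do not lead back to an already visited state."""
    candidates = [("swap", i, i + 1) for i in range(len(state) - 1)]
    return [a for a in candidates if apply_action(state, a) not in visited]


state: State = ("e1", "e2", "e3")
visited = {state}

first = valid_actions(state, visited)[0]
state = apply_action(state, first)
visited.add(state)

# The swap that would undo the previous step is no longer offered.
remaining = valid_actions(state, visited)
print(remaining)  # [('swap', 1, 2)]
```

The loss-function option would instead leave such actions available and penalise sequences that revisit a state.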

Identify problems in your task

Suboptimal expert policy?

- Use rollouts that mix expert and classifier.
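A sketch of a mixed rollout, assuming the expert is chosen with probability `beta` at each step and a learned classifier otherwise. Whether the choice is made per step or once per rollout differs between algorithms, so treat this as one possible variant. `classifier_policy` is a stand-in for a trained model, and all names are hypothetical.

```python
import random
from typing import List, Sequence


def expert_policy(state: List[str], gold: Sequence[str]) -> str:
    """Toy expert (assumption): return the gold tag of the next untagged token."""
    return gold[len(state)]


def classifier_policy(state: List[str], gold: Sequence[str]) -> str:
    """Stand-in for a learned classifier: always predicts NOUN."""
    return "NOUN"


def mixed_rollout(state: List[str], gold: Sequence[str],
                  beta: float, rng: random.Random) -> List[str]:
    """Complete the sequence, using the expert with probability beta at each step."""
    trajectory = list(state)
    while len(trajectory) < len(gold):
        policy = expert_policy if rng.random() < beta else classifier_policy
        trajectory.append(policy(trajectory, gold))
    return trajectory


gold = ["DET", "NOUN", "VERB"]
rng = random.Random(0)
expert_only = mixed_rollout(["DET"], gold, beta=1.0, rng=rng)
classifier_only = mixed_rollout(["DET"], gold, beta=0.0, rng=rng)
print(expert_only)      # ['DET', 'NOUN', 'VERB']
print(classifier_only)  # ['DET', 'NOUN', 'NOUN']
```

Intermediate values of `beta` let the costs reflect what the classifier would actually do after an action, which matters when the expert is suboptimal.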

Errors in the rollin introducing noise into the feature vectors?

- Try sequence correction.

Too many actions to roll out?

- Try targeted exploration.

Irrelevant errors in rollouts affect costing?

- Try focused costing.

Noisy training instances?

- Try $\alpha$-bound noise reduction.
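A minimal sketch of bound-based noise reduction, assuming the bound caps how many times a training instance may be misclassified before it is excluded from further training as likely noise. This is our reading of the technique, not something the slide spells out. `train_with_alpha_bound`, `predict` and `update` are hypothetical names.

```python
from collections import Counter
from typing import Callable, List, Set, Tuple

Example = Tuple[str, str]  # (features, gold action); toy types (assumption)


def train_with_alpha_bound(examples: List[Example],
                           predict: Callable[[str], str],
                           update: Callable[[str, str], None],
                           alpha: int,
                           epochs: int) -> Set[int]:
    """Mistake-driven training that drops an instance after alpha misclassifications.

    Returns the indices of the instances that were dropped.
    """
    mistakes: Counter = Counter()
    for _ in range(epochs):
        for index, (features, label) in enumerate(examples):
            if mistakes[index] >= alpha:
                continue  # bound reached: treat as noise and skip
            if predict(features) != label:
                mistakes[index] += 1
                update(features, label)
    return {index for index, count in mistakes.items() if count >= alpha}


updates: List[Example] = []
examples = [("x1", "A"), ("x2", "B")]
dropped = train_with_alpha_bound(
    examples,
    predict=lambda features: "A",  # stub model that never learns
    update=lambda features, label: updates.append((features, label)),
    alpha=2,
    epochs=5,
)
print(dropped)  # {1}
```

With the stub model, the second instance triggers two updates and is then ignored for the remaining epochs.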

Summary

Discussed expert policy definitions:

- Static vs. dynamic vs. suboptimal policies.

Examined variations to exploration:

- Early termination, and targeted and partial exploration.

Showed how we can reduce noise in the transition sequence:

- $\alpha$-bound and focused costing.

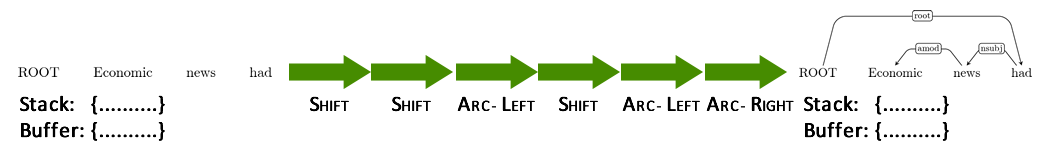

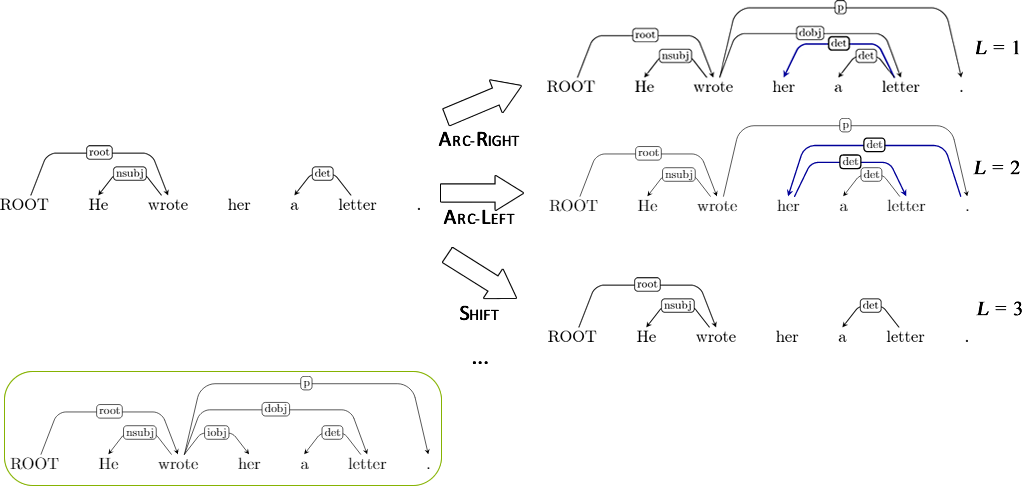

Applied imitation learning to various NLP tasks:

- Transitions, loss functions, expert policies.

- And improved on results!

Annotated bibliography

PhD theses

Hal Daumé III, 2006: Practical Structured Learning Techniques for Natural Language Processing

Stéphane Ross, 2013: Interactive Learning for Sequential Decisions and Predictions

Pieter Abbeel, 2008: Apprenticeship Learning and Reinforcement Learning with Application to Robotic Control

Papers

Abbeel and Ng, 2004: Apprenticeship Learning via Inverse Reinforcement Learning (inverse reinforcement learning)

Vieira and Eisner, 2016: Learning to Prune: Exploring the Frontier of Fast and Accurate Parsing (LoLS with random expert=RL, changeprop)

Goldberg and Nivre, 2012: A Dynamic Oracle for Arc-Eager Dependency Parsing (DAgger for dependency parsing)

Ballesteros et al., 2016: Training with Exploration Improves a Greedy Stack LSTM Parser (DAgger for LSTM-based dependency parsing)

Clark and Manning, 2015: Entity-Centric Coreference Resolution with Model Stacking

Papers

Lampouras and Vlachos, 2016: Imitation learning for language generation from unaligned data (LoLS for natural language generation, sequence correction)

Goodman et al., 2016: Noise reduction and targeted exploration in imitation learning for Abstract Meaning Representation parsing (V-DAgger for semantic parsing, targeted exploration)

Vlachos and Craven, 2011: Search-based Structured Prediction applied to Biomedical Event Extraction (SEARN for biomedical event extraction, focused costing)

Berant and Liang, 2015: Imitation Learning of Agenda-based Semantic Parsers

Ranzato et al., 2016: Sequence Level Training with Recurrent Neural Networks (Imitation learning for RNNs, learns a cost estimator instead of using roll-outs)

Papers

Daumé III et al., 2009: Search-based structured prediction (SEARN algorithm)

Daumé III, 2009: Unsupervised Search-based Structured Prediction (Unsupervised structured prediction with SEARN)

Chang et al., 2015: Learning to search better than your teacher (LoLS algorithm, connection with RL)

Ho and Ermon, 2016: Generative Adversarial Imitation Learning (Connection with adversarial training)

He et al., 2012: Imitation Learning by Coaching (coaching for DAgger)